Kubernetes Cloud Native Practice (1): Installation

2023-06-13 20:33:38

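Series contents

- Kubernetes Cloud Native Practice (1): Installation
- Kubernetes Cloud Native Practice (2): Basic Usage
- Kubernetes Cloud Native Practice (3): NFS/PV/PVC
- Kubernetes Cloud Native Practice (4): Moving Middleware to the Cloud
- Kubernetes Cloud Native Practice (5): Moving Applications to the Cloud
- Kubernetes Cloud Native Practice (6): Integrating the ELK Logging Platform
- Kubernetes Cloud Native Practice (7): Application Monitoring
- Kubernetes Cloud Native Practice (8): CI/CD Integration
- Kubernetes Cloud Native Practice (9): Operations and Management
- Kubernetes Cloud Native Practice (10): Common Issues
- Kubernetes Cloud Native Practice (11): Screenshots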

Installation

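- Create three virtual machines (create one, then clone it twice) running Ubuntu 22.04, each with 2 CPU cores, 8 GB of RAM and a 40 GB disk. A fourth machine runs a MySQL cluster, Elasticsearch and other services, for four instances in total.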

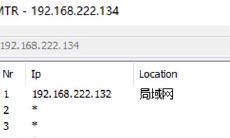

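- Give each node a static IP: master 192.168.222.129, slave1 192.168.222.132, slave2 192.168.222.133. Edit `/etc/netplan/00-installer-config.yaml` (`vim /etc/netplan/00-installer-config.yaml`). The content below is slave2's; change `addresses` on each node:

```yaml
network:
  ethernets:
    ens33:
      dhcp4: no
      addresses: [192.168.222.133/24]
      routes:
        - to: default
          via: 192.168.222.2
      nameservers:
        addresses: [192.168.222.2]
  version: 2
```

Then apply it:

```bash
netplan apply
```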
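Because the three nodes differ only in their address, the netplan file can also be generated per node. This is a minimal sketch assuming the interface name `ens33`, a /24 prefix and `192.168.222.2` as both gateway and DNS, all taken from the example above; `netplan_config` is a hypothetical helper name.

```bash
# Print a static-IP netplan config for one node.
# Assumptions: interface ens33, /24 prefix, gateway and DNS 192.168.222.2
# (values from the example above). netplan_config is a hypothetical helper.
netplan_config() {
  local ip=$1 iface=${2:-ens33} gw=${3:-192.168.222.2}
  cat <<EOF
network:
  ethernets:
    ${iface}:
      dhcp4: no
      addresses: [${ip}/24]
      routes:
        - to: default
          via: ${gw}
      nameservers:
        addresses: [${gw}]
  version: 2
EOF
}

# Usage on each node as root, e.g. on slave1:
#   netplan_config 192.168.222.132 > /etc/netplan/00-installer-config.yaml
#   netplan apply
```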

- Install Docker.

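- Disable swap: open `/etc/fstab` (`vim /etc/fstab`), comment out the swap line with `#`, and reboot. If `free` then reports 0 for swap, it worked.

- Set up passwordless SSH login from the master (management) node to the worker nodes. This makes managing the nodes easier, although `kubeadm join` itself does not use SSH.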
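The swap step can be scripted instead of editing `/etc/fstab` by hand. This is a sketch assuming GNU sed (as on Ubuntu); `comment_out_swap` is a hypothetical helper name. Running `swapoff -a` turns swap off immediately, so the reboot is only needed to confirm the change persists.

```bash
# Comment out active swap entries in an fstab-format file (default /etc/fstab).
# comment_out_swap is a hypothetical helper; GNU sed is assumed (sed -i).
comment_out_swap() {
  local fstab=${1:-/etc/fstab}
  cp "$fstab" "$fstab.bak"   # keep a backup before editing in place
  # Prefix '#' to every non-comment line that has "swap" as a whitespace-separated field.
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Usage (as root):
#   swapoff -a                  # disable swap for the running system
#   comment_out_swap /etc/fstab # keep it disabled after a reboot
#   free -h                     # the Swap row should show 0B
```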

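- Install kubelet, kubeadm and kubectl. Add the Aliyun apt source:

```bash
cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF
```

Then import the mirror's signing key, run `apt-get update`, and install the three packages with `apt-get install -y kubelet kubeadm kubectl`.

- Initialize the master node (replace the IP with your master's IP):

```bash
kubeadm init --kubernetes-version=1.23.1 --apiserver-advertise-address=192.168.222.129 --image-repository registry.aliyuncs.com/google_containers --service-cidr=10.10.0.0/16 --pod-network-cidr=10.244.0.0/16
```

If this step fails with `[ERROR CRI]: container runtime is not running: output: ...`, edit `/etc/containerd/config.toml`, change `disabled_plugins` to `enabled_plugins`, and restart containerd (`systemctl restart containerd`). See https://github.com/containerd/containerd/issues/8139#issuecomment-1478375386

If something goes wrong along the way, reset with `kubeadm reset` and initialize again.

On success, the output ends with a join command like the one below. Copy it; the slaves need it to join the cluster:

```bash
kubeadm join 192.168.222.129:6443 --token 3b2pqq.fe3sjyd96ol0y564 --discovery-token-ca-cert-hash sha256:4188dca1cf2b7bc527ef2e6c4adbe631b36d1b6c388ecbfb145f7f2d1a768450
```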
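The containerd fix for the `[ERROR CRI]` message can be applied with a single substitution. This is a sketch of the same edit, assuming GNU sed and the stock `config.toml` from Docker's containerd.io package, which contains the line `disabled_plugins = ["cri"]`; `enable_containerd_cri` is a hypothetical helper name. What matters is that `cri` is no longer listed under `disabled_plugins`.

```bash
# Re-enable containerd's CRI plugin by rewriting disabled_plugins = ["cri"]
# to enabled_plugins = ["cri"], mirroring the manual edit described above.
# enable_containerd_cri is a hypothetical helper; GNU sed is assumed.
enable_containerd_cri() {
  local conf=${1:-/etc/containerd/config.toml}
  cp "$conf" "$conf.bak"   # keep a backup of the original config
  sed -i -E 's/^disabled_plugins[[:space:]]*=[[:space:]]*\["cri"\]/enabled_plugins = ["cri"]/' "$conf"
}

# Usage (as root), then rerun kubeadm init:
#   enable_containerd_cri
#   systemctl restart containerd
```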

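- Configure kubectl:

```bash
mkdir -p /root/.kube && cp /etc/kubernetes/admin.conf /root/.kube/config
```

Check that kubectl works with these two commands: `kubectl get nodes` lists the nodes that have joined, and `kubectl get cs` shows the cluster component status (ComponentStatus has been deprecated since v1.19 but still responds on 1.23).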
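When checking `kubectl get nodes`, note that the master typically reports `NotReady` until a network plugin such as Calico is installed. A small check that every node is `Ready` can be written against the command's table output; this sketch reads the output on stdin (so it does not depend on a live cluster) and treats any STATUS other than exactly `Ready` as not ready. `all_nodes_ready` is a hypothetical helper name.

```bash
# Succeed only if at least one node is listed and every node's STATUS
# column (2nd field) is exactly "Ready". Reads `kubectl get nodes` output on stdin.
# all_nodes_ready is a hypothetical helper name.
all_nodes_ready() {
  awk 'NR > 1 { total++; if ($2 != "Ready") notready++ }
       END { ok = (total > 0 && notready == 0); exit (ok ? 0 : 1) }'
}

# Usage on the master:
#   kubectl get nodes | all_nodes_ready && echo "all nodes Ready"
```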

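- Install Calico on the master:

```bash
kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.25.1/manifests/tigera-operator.yaml
wget https://raw.githubusercontent.com/projectcalico/calico/v3.25.1/manifests/custom-resources.yaml
```

In `custom-resources.yaml`, change `cidr` to the pod network used in `kubeadm init` (`--pod-network-cidr=10.244.0.0/16`), then create the resources:

```bash
kubectl create -f custom-resources.yaml
```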
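The `cidr` edit can also be done with sed. In the v3.25.1 `custom-resources.yaml` the pool is declared as `cidr: 192.168.0.0/16` under `ipPools`; this sketch rewrites every `cidr:` value while keeping its indentation, assuming GNU sed and that the file contains no other `cidr:` keys you want to keep. `set_calico_cidr` is a hypothetical helper name.

```bash
# Set the Calico IP pool CIDR in custom-resources.yaml, preserving indentation.
# set_calico_cidr is a hypothetical helper; GNU sed is assumed.
set_calico_cidr() {
  local file=$1 cidr=${2:-10.244.0.0/16}
  # '#' is used as the sed delimiter because the CIDR contains '/'.
  sed -i -E "s#^([[:space:]]*)cidr:.*#\1cidr: ${cidr}#" "$file"
}

# Usage:
#   set_calico_cidr custom-resources.yaml 10.244.0.0/16
#   kubectl create -f custom-resources.yaml
```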

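- Join the slave nodes to the cluster. On each slave, repeat steps 2 to 6 above (from setting the static IP through installing kubelet, kubeadm and kubectl). Set the hostname with `vim /etc/hostname` (e.g. `slave1`), and in `vim /etc/hosts` change the 127.0.0.1 entry to `slave1`. Then run the join command saved earlier:

```bash
kubeadm join 192.168.222.129:6443 --token 3b2pqq.fe3sjyd96ol0y564 --discovery-token-ca-cert-hash sha256:4188dca1cf2b7bc527ef2e6c4adbe631b36d1b6c388ecbfb145f7f2d1a768450
```

If it fails with `[ERROR FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists`, delete that `ca.crt` file. (In my case this happened because I had already run the command once before changing the hostname.) When the output shows `This node has joined the cluster:`, the join succeeded; verify it on the master with `kubectl get nodes`.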
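Instead of copying the join command by hand, it can be pulled out of the `kubeadm init` output if that output was saved, for example with `kubeadm init ... | tee kubeadm-init.log` (a hypothetical file name). kubeadm prints the command across backslash-continued lines, so this sketch joins them back into one line. If the bootstrap token has expired (the default TTL is 24 hours), `kubeadm token create --print-join-command` on the master prints a fresh one. `extract_join_cmd` is a hypothetical helper name.

```bash
# Print the first "kubeadm join ..." command found in saved kubeadm init output,
# joining backslash-continued lines into a single line.
# extract_join_cmd is a hypothetical helper; reads a file or stdin.
extract_join_cmd() {
  awk '
    /^[ \t]*kubeadm join/ {
      cmd = $0
      sub(/^[ \t]+/, "", cmd)
      # While the command ends in a backslash, append the next line.
      while (cmd ~ /\\$/ && (getline line) > 0) {
        sub(/\\$/, "", cmd)
        sub(/^[ \t]+/, "", line)
        cmd = cmd line
      }
      print cmd
      exit
    }' "${1:-/dev/stdin}"
}

# Usage:
#   extract_join_cmd kubeadm-init.log   # then run the printed command on each slave
```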

- Install the dashboard:

```bash
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc7/aio/deploy/recommended.yaml
```

In the `kubernetes-dashboard` Service, add `type: NodePort` at line 40 of the original file and add `nodePort: 30000` below `targetPort`, then apply it:

```bash
kubectl apply -f recommended.yaml
```

Open https://192.168.222.129:30000/. On the browser's certificate warning page, type `thisisunsafe` directly (this works in Chrome) to proceed.

Create the config file `dashboard-adminuser.yaml`:

```bash
cat <<EOF > dashboard-adminuser.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF
```

Apply it and print the login token:

```bash
kubectl apply -f dashboard-adminuser.yaml
kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"
```

Paste the printed text into the browser to log in.
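The `get secret` command relies on Kubernetes auto-creating a token Secret for each ServiceAccount, which is the case on the 1.23 cluster here but no longer by default from 1.24 on. This sketch tries `kubectl create token` (available in kubectl 1.24+) first and falls back to the Secret-based command from the step above; `dashboard_token` is a hypothetical helper name.

```bash
# Print a Dashboard login token for the admin-user ServiceAccount.
# dashboard_token is a hypothetical helper name.
dashboard_token() {
  local ns=kubernetes-dashboard sa=admin-user secret
  # kubectl 1.24+ can request a short-lived token directly (TokenRequest API).
  if kubectl -n "$ns" create token "$sa" 2>/dev/null; then
    return 0
  fi
  # Fallback for older setups, where a token Secret is auto-created per
  # ServiceAccount: look up its name and decode the token field.
  secret=$(kubectl -n "$ns" get sa/"$sa" -o jsonpath='{.secrets[0].name}')
  kubectl -n "$ns" get secret "$secret" -o go-template='{{.data.token | base64decode}}'
}

# Usage on the master:
#   dashboard_token   # paste the output into the Dashboard login page
```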

- The dashboard that ships with Kubernetes is not very convenient; Kuboard is an alternative, started with Docker on the master:

```bash
docker run -d --restart=unless-stopped --name=kuboard \
  -p 801:80/tcp -p 10081:10081/tcp \
  -e KUBOARD_ENDPOINT="http://192.168.222.129:801" \
  -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \
  -v /usr/local/kuboard-data:/data \
  eipwork/kuboard:v3
```
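The only host-specific value in the Kuboard command is the IP inside `KUBOARD_ENDPOINT`, which, as I understand Kuboard's setup, should be an address the cluster nodes can reach (not 127.0.0.1). This sketch wraps the same `docker run` options in a function that takes the host IP as a parameter; `run_kuboard` is a hypothetical helper name.

```bash
# Start Kuboard v3 with the options used above, parameterized by host IP.
# run_kuboard is a hypothetical helper name.
run_kuboard() {
  local host_ip=$1
  docker run -d --restart=unless-stopped --name=kuboard \
    -p 801:80/tcp -p 10081:10081/tcp \
    -e KUBOARD_ENDPOINT="http://${host_ip}:801" \
    -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \
    -v /usr/local/kuboard-data:/data \
    eipwork/kuboard:v3
}

# Usage on the master:
#   run_kuboard 192.168.222.129   # then open http://192.168.222.129:801
```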

- After Kuboard is initialized, install metrics-server and metrics-scraper on the master node. Kuboard provides the YAML files; just run `kubectl create -f xxxx.yaml`.